Memory Management Unit

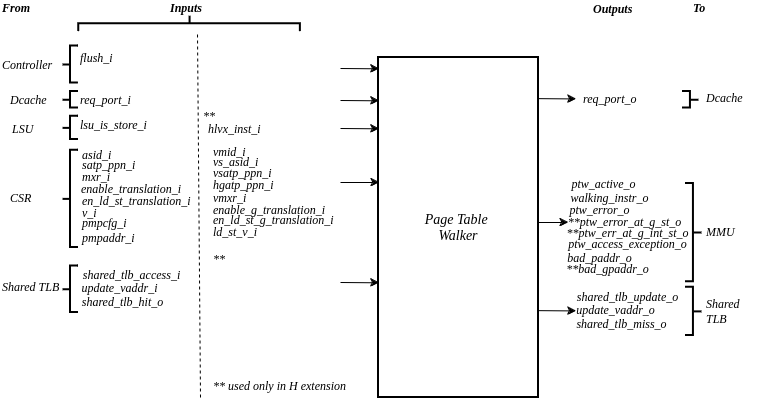

The Memory Management Unit (MMU) module is a crucial component in the RISC-V-based processor, serving as the backbone for virtual memory management and address translation.

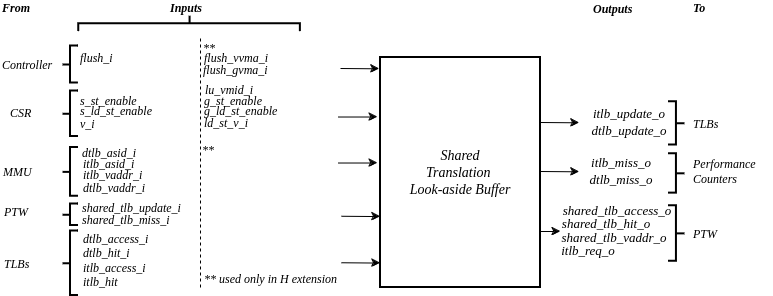

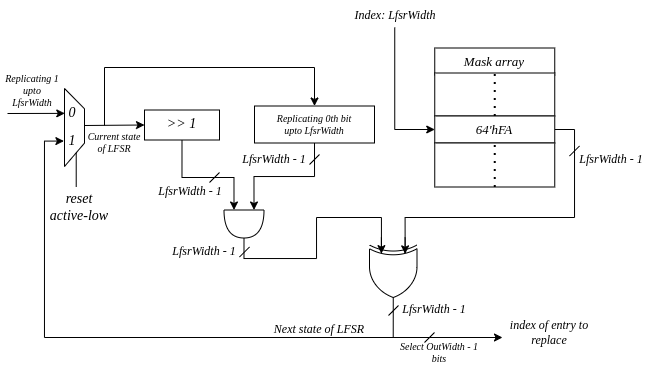

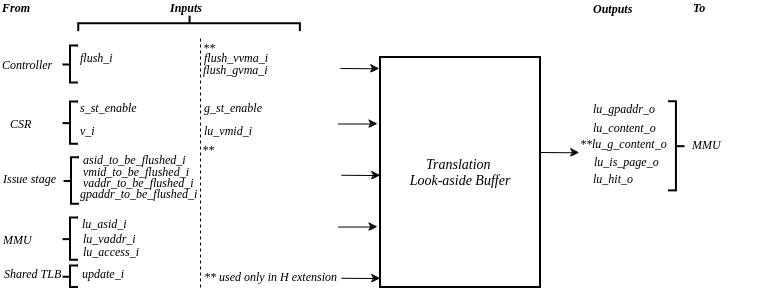

Figure 1: Inputs and Outputs of CVA6 MMU

At its core, the MMU translates virtual addresses into their corresponding physical addresses. This translation is essential for providing memory protection, isolation, and efficient memory management in modern computer systems. It handles both instruction and data accesses, ensuring a seamless interaction between the processor and virtual memory. Within the MMU, several major blocks take part in this address translation process. These include:

Instruction TLB (ITLB)

Data TLB (DTLB)

Shared TLB (optional)

Page Table Walker (PTW)

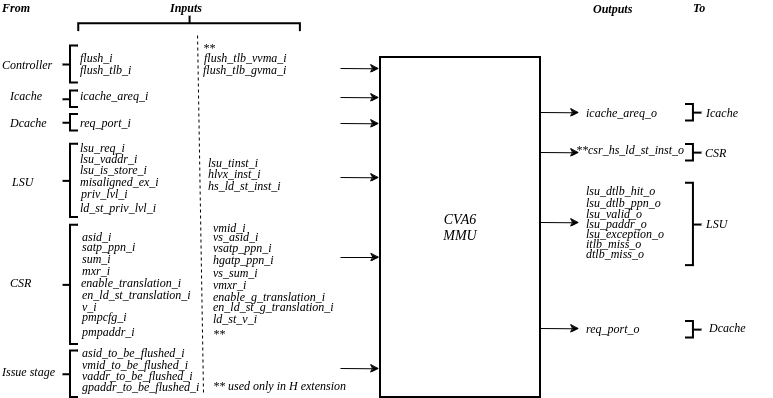

Figure 2: Major Blocks in CVA6 MMU

The MMU manages privilege levels and access control, enforcing permissions for user and supervisor modes while handling access exceptions. It employs Translation Lookaside Buffers (TLBs) for efficient address translation, reducing the need for page table access. TLB hits yield quick translations, but on misses, the shared TLB is consulted (when used), and if necessary, the Page Table Walker (PTW) performs page table walks, updating TLBs and managing exceptions during the process.

In addition to these functionalities, the MMU integrates support for Physical Memory Protection (PMP), enabling it to enforce access permissions and memory protection configurations as specified by the PMP settings. This additional layer of security and control enhances the management of memory accesses.

Instruction and Data Interfaces

The MMU maintains interfaces with the instruction cache (ICache) and the load-store unit (LSU). It receives virtual addresses from these components and proceeds to translate them into physical addresses, a fundamental task for ensuring proper program execution and memory access.

The MMU block can be parameterized to support sv32, sv39 and sv39x4 virtual memory. In CVA6, some of these parameters are configurable by the user, and some others are calculated depending on other parameters of the core. The available HW configuration parameters are the following:

List of configuration parameters for MMU

| Parameter | Description | Type | Possible values | User config |
|---|---|---|---|---|
| | Number of entries in Instruction TLB | Natural | | Yes |
| | Number of entries in Data TLB | Natural | | Yes |
| | Size of shared TLB | Natural | | Yes |
| | Indicates if the shared TLB optimization is used (1) or not (0) | bit | 1 or 0 | Yes |
| | Indicates if Hypervisor extension is used (1) or not (0) | bit | 1 or 0 | Yes |
| | Indicates the width of the Address Space Identifier (ASID) | Natural | | No |
| | Indicates the width of the Virtual Machine space Identifier (VMID) - (used only when Hypervisor extension is enabled) | Natural | | No |
| | Indicates the width of the Physical Page Number (PPN) | Natural | | No |
| | Indicates the width of the Guest Physical Page Number (GPPN) - (used only when Hypervisor extension is enabled) | Natural | | No |
| | Indicates the width of the Virtual Address Space | Natural | | No |
| | Indicates the width of the extended Virtual Address Space when Hypervisor is used | Natural | | No |
| | Length of Virtual Page Number (VPN) | Natural | | No |
| | Number of page table levels | Natural | | No |

| Parameter | sv32 | sv39 | sv39x4 |
|---|---|---|---|
| | 2 | 16 | 16 |
| | 2 | 16 | 16 |
| | 64 | 64 | 64 |
| | 1 | 0 | 0 |
| | 0 | 0 | 1 |
| | 9 | 16 | 16 |
| | 7 | 14 | 14 |
| | 22 | 44 | 44 |
| | 22 | 29 | 29 |
| | 32 | 39 | 39 |
| | 34 | 41 | 41 |
| | 20 | 27 | 29 |
| | 2 | 3 | 3 |
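The VPN lengths and page table level counts in the table above are consistent with simple arithmetic on the address widths. The following is a minimal sketch of that arithmetic, assuming a 12-bit page offset and the per-level VPN field widths defined by the RISC-V privileged specification; the names `SCHEMES`, `vpn_len` and `vpn_field_widths` are hypothetical and do not correspond to CVA6 parameters.

```python
PAGE_OFFSET_BITS = 12  # 4 KiB base pages in all three schemes

# Hypothetical summary per scheme:
# (address width covered by the VPN + offset, page table levels, VPN bits per non-root level)
SCHEMES = {
    "sv32":   (32, 2, 10),
    "sv39":   (39, 3, 9),
    "sv39x4": (41, 3, 9),   # extended (guest-physical) address width
}

def vpn_len(scheme: str) -> int:
    """VPN length = address width minus the page offset."""
    va_bits, _, _ = SCHEMES[scheme]
    return va_bits - PAGE_OFFSET_BITS

def vpn_field_widths(scheme: str) -> list:
    """Width of each VPN field, from level 0 up to the root level."""
    _, levels, bits = SCHEMES[scheme]
    root_bits = vpn_len(scheme) - bits * (levels - 1)
    return [bits] * (levels - 1) + [root_bits]
```

Under these assumptions, the root-level field of sv39x4 comes out two bits wider than the other fields, which accounts for the VPN length of 29 instead of 27.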

Table 1: CVA6 MMU Input Output Signals

| Signal | IO | Connection Type | Type | Description |
|---|---|---|---|---|
| | in | Subsystem | logic | Subsystem Clock |
| | in | Subsystem | logic | Asynchronous reset active low |
| | in | Controller | logic | Sfence Committed |
| | in | CSR RegFile | logic | Enables address translation request for instruction |
| | in | CSR RegFile | logic | Enables virtual memory translation for instructions |
| | in | CSR RegFile | logic | Enables address translation request for load or store |
| | in | CSR RegFile | logic | Enables virtual memory translation for load or store |
| | in | Cache Subsystem | icache_arsp_t | Icache Response |
| | out | Cache Subsystem | icache_areq_t | Icache Request |
| | in | Load Store Unit | exception_t | Indicate misaligned exception |
| | in | Load Store Unit | logic | Request address translation |
| | in | Load Store Unit | logic [VLEN-1:0] | Virtual Address In |
| | in | Load Store Unit | logic [31:0] | Transformed Instruction In when Hypervisor Extension is enabled. Set to 0 (unused) when not. |
| | in | Store Unit | logic | Translation is requested by a store |
| | out | CSR RegFile | logic | Indicate a hypervisor load store instruction. |
| | out | Store / Load Unit | logic | Indicate a DTLB hit |
| | out | Load Unit | logic [PPNW-1:0] | Send PPN to LSU |
| | out | Load Store Unit | logic | Indicate a valid translation |
| | out | Store / Load Unit | logic [PLEN-1:0] | Translated Address |
| | out | Store / Load Unit | exception_t | Address Translation threw an exception |
| | in | CSR RegFile | priv_lvl_t | Privilege level for instruction fetch interface |
| | in | CSR RegFile | logic | Virtualization mode state |
| | in | CSR RegFile | priv_lvl_t | Indicates the virtualization mode at which load and stores should happen |
| | in | CSR RegFile | logic | Virtualization mode state |
| | in | CSR RegFile | logic | Supervisor User Memory Access bit in xSTATUS CSR register |
| | in | CSR RegFile | logic | Virtual Supervisor User Memory Access bit in xSTATUS CSR register when Hypervisor extension is enabled. |
| | in | CSR RegFile | logic | Make Executable Readable bit in xSTATUS CSR register |
| | in | CSR RegFile | logic | Virtual Make Executable Readable bit in xSTATUS CSR register for virtual supervisor when Hypervisor extension is enabled. |
| | in | Store / Load Unit | logic | Indicates that Instruction is a hypervisor load store with execute permissions |
| | in | CSR RegFile | logic | Indicates that Instruction is a hypervisor load store instruction |
| | in | CSR RegFile | logic [PPNW-1:0] | PPN of top level page table from SATP register |
| | in | CSR RegFile | logic [PPNW-1:0] | PPN of top level page table from VSATP register when Hypervisor extension is enabled |
| | in | CSR RegFile | logic [PPNW-1:0] | PPN of top level page table from HGATP register when Hypervisor extension is enabled |
| | in | CSR RegFile | logic [ASIDW-1:0] | ASID for the lookup |
| | in | CSR RegFile | logic [ASIDW-1:0] | ASID for the lookup for virtual supervisor when Hypervisor extension is enabled |
| | in | CSR RegFile | logic [VMIDW-1:0] | VMID for the lookup when Hypervisor extension is enabled |
| | in | Execute Stage | logic [ASIDW-1:0] | ASID of the entry to be flushed |
| | in | Execute Stage | logic [VMIDW-1:0] | VMID of the entry to be flushed when Hypervisor extension is enabled |
| | in | Execute Stage | logic [VLEN-1:0] | Virtual address of the entry to be flushed |
| | in | Execute Stage | logic [GPLEN-1:0] | Virtual address of the guest entry to be flushed when Hypervisor extension is enabled |
| | in | Controller | logic | Indicates SFENCE.VMA committed |
| | in | Controller | logic | Indicates HFENCE.VVMA committed |
| | in | Controller | logic | Indicates HFENCE.GVMA committed |
| | out | Performance Counter | logic | Indicate an ITLB miss |
| | out | Performance Counter | logic | Indicate a DTLB miss |
| | in | Cache Subsystem | dcache_req_o_t | D Cache Data Requests |
| | out | Cache Subsystem | dcache_req_i_t | D Cache Data Response |
| | in | CSR RegFile | pmpcfg_t [15:0] | PMP configurations |
| | in | CSR RegFile | logic [15:0][PLEN-3:0] | PMP Address |

Table 2: I Cache Request Struct (icache_areq_t)

| Signal | Type | Description |
|---|---|---|
| | logic | Address Translation Valid |
| | logic [PLEN-1:0] | Physical Address In |
| | exception_t | Exception occurred during fetch |

Table 3: I Cache Response Struct (icache_arsp_t)

| Signal | Type | Description |
|---|---|---|
| | logic | Address Translation Request |
| | logic [VLEN-1:0] | Virtual Address out |

Table 4: Exception Struct (exception_t)

| Signal | Type | Description |
|---|---|---|
| | logic [XLEN-1:0] | Cause of exception |
| | logic [XLEN-1:0] | Additional information of causing exception (e.g. instruction causing it), address of LD/ST fault |
| | logic [GPLEN-1:0] | Additional information when the exception is a guest exception (used only in hypervisor mode) |
| | logic [31:0] | Transformed instruction information |
| | logic | Signals when a guest virtual address is written to tval |
| | logic | Indicate that exception is valid |

Table 5: PMP Configuration Struct (pmpcfg_t)

| Signal | Type | Description |
|---|---|---|
| | logic | Lock this configuration |
| | logic [1:0] | Reserved bits in pmpcfg CSR |
| | pmp_addr_mode_t | Addressing Modes: OFF, TOR, NA4, NAPOT |
| | pmpcfg_access_t | None, read, write, execute |

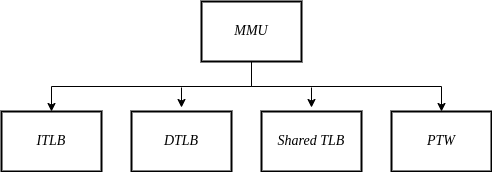

Figure 3: Control Flow in CVA6 MMU

Two potential exception sources exist:

Hardware Page Table Walker (HPTW) throwing an exception, signifying a page fault exception.

Access error due to insufficient permissions of PMP, known as an access exception.

The IF stage initiates a request to retrieve memory content at a specific virtual address. When the MMU is disabled, the instruction fetch request is directly passed to the I$ without modifications.

Address Translation in Instruction Interface

If virtual memory translation is enabled for instruction fetches, the following operations are performed in the instruction interface:

The requested virtual address is checked for compatibility with the selected page-based address translation scheme.

For page translation, the module determines the fetch physical address by combining the physical page number (PPN) from ITLB content and the offset from the virtual address. When hypervisor mode is enabled, the PPN from ITLB content is taken from the stage that is currently doing the translation.

Depending on the size of the identified page the PPN of the fetch physical address is updated with the corresponding bits of the VPN to ensure alignment for superpage translation.

If the Instruction TLB (ITLB) lookup hits, the fetch valid signal (which indicates a valid physical address) is activated in response to the input fetch request. Memory region accessibility is checked from the perspective of the fetch operation, potentially triggering a page fault exception in case of insufficient page privileges, or an access exception in case of insufficient PMP permission.

In case of an ITLB miss, if the page table walker (PTW) is active (only active if there is a shared TLB miss or the shared TLB is not used) and handling instruction fetches, the fetch valid signal is determined based on PTW errors or access exceptions.

If the fetch physical address doesn’t match any execute region, an Instruction Access Fault is raised. When not translating, PMPs are immediately checked against the physical address for access verification.
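The way the fetch physical address is assembled from the TLB content, including the superpage adjustment described above, can be illustrated with a small model. This is a sketch under stated assumptions, not the CVA6 implementation: it assumes Sv39 (9-bit VPN fields, 12-bit page offset), and the function name `compose_paddr` and the `page_level` encoding (0 for 4 KiB, 1 for 2 MiB, 2 for 1 GiB) are hypothetical.

```python
PAGE_OFFSET_BITS = 12    # 4 KiB base pages
VPN_BITS_PER_LEVEL = 9   # Sv39 assumption: three 9-bit VPN fields

def compose_paddr(vaddr: int, ppn: int, page_level: int) -> int:
    """Combine a PPN from the TLB with the untranslated bits of vaddr.

    page_level is a hypothetical encoding of the page size:
    0 = 4 KiB page, 1 = 2 MiB superpage, 2 = 1 GiB superpage.
    """
    offset = vaddr & ((1 << PAGE_OFFSET_BITS) - 1)
    vpn = vaddr >> PAGE_OFFSET_BITS
    # For a superpage, the low PPN bits are taken from the VPN so that the
    # access lands at the right position inside the larger page.
    low_mask = (1 << (VPN_BITS_PER_LEVEL * page_level)) - 1
    ppn = (ppn & ~low_mask) | (vpn & low_mask)
    return (ppn << PAGE_OFFSET_BITS) | offset
```

For a 4 KiB page the mask is zero and the PPN is used unchanged; for larger pages one or two VPN fields pass straight through into the physical address.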

Data Interface

Address Translation in Data Interface

If address translation is enabled for load or store, and no misaligned exception has occurred, the following operations are performed in the data interface:

Initially, translation is assumed to be invalid, signified by the MMU to LSU.

The translated physical address is formed by combining the PPN from the Page Table Entry (PTE) and the offset from the virtual address requiring translation. This address is sent one cycle later because an additional bank of registers delays the MMU’s answer. The PPN from the PTE is also shared separately with the LSU in the same cycle as the hit. When hypervisor mode is enabled, the PPN from the PTE is taken from the stage that is currently doing the translation.

In the case of superpage translation, the PPN of the translated physical address and the separately shared PPN are updated with the VPN of the virtual address.

If a Data TLB (DTLB) hit occurs, it indicates a valid translation, and various fault checks are performed depending on whether it’s a load or store request.

For store requests, if the page is not writable, the dirty flag isn’t set, or privileges are violated, it results in a page fault corresponding to the store access. If PMPs are also violated, it leads to an access fault corresponding to the store access. Page faults take precedence over access faults.

For load requests, a page fault is triggered if there are insufficient access privileges. PMPs are checked again during load access, resulting in an access fault corresponding to load access if PMPs are violated.

In case of a DTLB miss, potential exceptions are monitored during the page table walk. If the PTW indicates a page fault, the corresponding page fault related to the requested type is signaled. If the PTW indicates an access exception, the load access fault is indicated through address translation because the page table walker can only throw load access faults.
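The fault checks performed on a DTLB hit can be summarized as a small decision function. This is a simplified sketch, not the CVA6 logic: the function name, the string labels, and the boolean inputs are hypothetical, `priv_ok` stands in for the full privilege check, and applying the page-fault-before-access-fault ordering to loads as well as stores is an assumption.

```python
from typing import Optional

def dtlb_hit_fault(is_store: bool, pte_w: bool, pte_d: bool,
                   priv_ok: bool, pmp_ok: bool) -> Optional[str]:
    """Return the fault raised on a DTLB hit, or None if the access is allowed.

    pte_w / pte_d: write and dirty bits of the matching PTE.
    priv_ok: result of the privilege check (hypothetical single flag).
    pmp_ok: result of the PMP check on the translated address.
    """
    if is_store:
        # Page faults take precedence over access faults.
        if not pte_w or not pte_d or not priv_ok:
            return "store_page_fault"
        if not pmp_ok:
            return "store_access_fault"
        return None
    if not priv_ok:
        return "load_page_fault"
    if not pmp_ok:
        return "load_access_fault"
    return None
```

A store to a page that is writable but whose dirty bit is clear therefore reports a store page fault even if the PMP check would also have failed.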

Address Translation is Disabled

When address translation is not enabled, the physical address is immediately checked against Physical Memory Protections (PMPs). If there is a request from the LSU, no misaligned exception has occurred, and PMPs are violated, an access fault corresponding to the request type is raised.

Translation Lookaside Buffer

Page tables are accessed for translating virtual memory addresses to physical memory addresses. This translation needs to be carried out for every load and store instruction and also for every instruction fetch. Since page tables are resident in physical memory, accessing these tables in all these situations has a significant impact on performance. Page table accesses occur in patterns that are closely related in time. Furthermore, the spatial and temporal locality of data accesses or instruction fetches means that the same page is referenced repeatedly. Taking advantage of these access patterns, the processor keeps the information of recent address translations, to enable fast retrieval, in a small cache called the Translation Lookaside Buffer (TLB) or address-translation cache.

The CVA6 TLB is structured as a fully associative cache, where the virtual address that needs to be translated is compared against all the individual TLB entries. Given a virtual address, the processor examines the TLB (TLB lookup) to determine if the virtual page number (VPN) of the page being accessed is in the TLB. When a TLB entry is found (TLB hit), the TLB returns the corresponding physical page number (PPN) which is used to calculate the target physical address. If no TLB entry is found (TLB miss) the processor has to read individual page table entries from memory (Table walk). In CVA6 table walking is supported by dedicated hardware. Once the processor finishes the table walk, it has the Physical Page Number (PPN) corresponding to the Virtual Page Number (VPN) that needs to be translated. The processor adds an entry for this address translation to the TLB so future translations of that virtual address will happen quickly through the TLB. During the table walk the processor may find out that the corresponding physical page is not resident in memory. At this stage a page table exception (Page Fault) is generated which gets handled by the operating system. The operating system places the appropriate page in memory, updates the appropriate page tables and returns execution to the instruction which generated the exception.

The input and output signals of the TLB are shown in the following figure.

Figure 4: Inputs and Outputs of CVA6 TLB

Table 6: CVA6 TLB Input Output Signals

| Signal | IO | Connection | Type | Description |
|---|---|---|---|---|
| | in | Subsystem | logic | Subsystem Clock |
| | in | Subsystem | logic | Asynchronous reset active low |
| | in | Controller | logic | SFENCE.VMA Committed |
| | in | Controller | logic | HFENCE.VVMA Committed |
| | in | Controller | logic | HFENCE.GVMA Committed |
| | in | Controller | logic | Indicates address translation request (s-stage) - used only in Hypervisor mode |
| | in | Controller | logic | Indicates address translation request (g-stage) - used only in Hypervisor mode |
| | in | Controller | logic | Indicates the virtualization mode state - used only in Hypervisor mode |
| | in | Shared TLB | tlb_update_cva6_t | Updated tag and content of TLB |
| | in | Cache Subsystem | logic | Signal indicating a lookup access is being requested |
| | in | CVA6 MMU | logic [ASIDW-1:0] | ASID for the lookup |
| | in | CVA6 MMU | logic [VMIDW-1:0] | VMID for the lookup when Hypervisor extension is enabled. |
| | in | Cache Subsystem | logic [VLEN-1:0] | Virtual address for the lookup |
| | out | CVA6 MMU | pte_cva6_t | Output for the content of the TLB entry |
| | out | CVA6 MMU | pte_cva6_t | Output for the content of the TLB entry (g-stage) |
| | in | Execute Stage | logic [ASIDW-1:0] | ASID of the entry to be flushed. Vector 1 is the analogous one for virtual supervisor when Hypervisor extension is enabled |
| | in | Execute Stage | logic [VMIDW-1:0] | VMID of the entry to be flushed when Hypervisor extension is enabled |
| | in | Execute Stage | logic [VLEN-1:0] | Virtual address of the entry to be flushed. Vector 1 is the analogous one for virtual supervisor when Hypervisor extension is enabled. |
| | in | Execute Stage | logic [VLEN-1:0] | Virtual address of the guest entry to be flushed when Hypervisor extension is enabled. |
| | out | CVA6 MMU | logic [PT_LEVELS-2:0] | Output indicating whether the TLB entry corresponds to any page at the different page table levels |
| | out | CVA6 MMU | logic | Output indicating whether the lookup resulted in a hit or miss |

Table 7: CVA6 TLB Update Struct (tlb_update_cva6_t)

| Signal | Type | Description |
|---|---|---|
| | logic | Indicates whether the TLB update entry is valid or not |
| | logic [PtLevels-2:0][HYP_EXT:0] | Indicates if the TLB entry corresponds to any page at the different levels. When Hypervisor extension is used it also includes information for the G-stage. |
| | logic [VPN_LEN-1:0] | Virtual Page Number (VPN) used for updating the TLB |
| | logic [ASIDW-1:0] | ASID used for updating the TLB |
| | logic [VMIDW-1:0] | VMID used for updating the TLB when Hypervisor extension is enabled |
| | logic [HYP_EXT*2:0] | Used only in Hypervisor mode. Indicates for which stage the translation was requested and for which it is therefore valid (s_stage, g_stage or virtualization enabled). |
| | pte_cva6_t | Content of the TLB update entry |
| | pte_cva6_t | Content of the TLB update entry for g stage when Hypervisor extension is enabled |

Table 8: CVA6 PTE Struct (pte_cva6_t)

| Signal | Type | Description |
|---|---|---|
| | logic [PPNW-1:0] | Physical Page Number (PPN) |
| | logic [1:0] | Reserved for use by supervisor software |
| | logic | Dirty bit indicating whether the page has been modified (dirty) or not. 0: Page is clean, i.e., has not been written. 1: Page is dirty, i.e., has been written. |
| | logic | Accessed bit indicating whether the page has been accessed. 0: Virtual page has not been accessed since the last time the A bit was cleared. 1: Virtual page has been read, written, or fetched from since the last time the A bit was cleared. |
| | logic | Global bit marking a page as part of a global address space valid for all ASIDs. 0: Translation is valid for a specific ASID. 1: Translation is valid for all ASIDs. |
| | logic | User bit indicating privilege level of the page. 0: Page is accessible in supervisor mode but not in user mode. 1: Page is accessible in user mode; supervisor-mode loads and stores to it are permitted only when the SUM bit is set. |
| | logic | Execute bit which allows execution of code from the page. 0: Code execution is not allowed. 1: Code execution is permitted. |
| | logic | Write bit which allows the page to be written. 0: Write operations are not allowed. 1: Write operations are permitted. |
| | logic | Read bit which allows read access to the page. 0: Read operations are not allowed. 1: Read operations are permitted. |
| | logic | Valid bit indicating the page table entry is valid. 0: Page is invalid, i.e., page is not in DRAM, translation is not valid. 1: Page is valid, i.e., page resides in DRAM, translation is valid. |

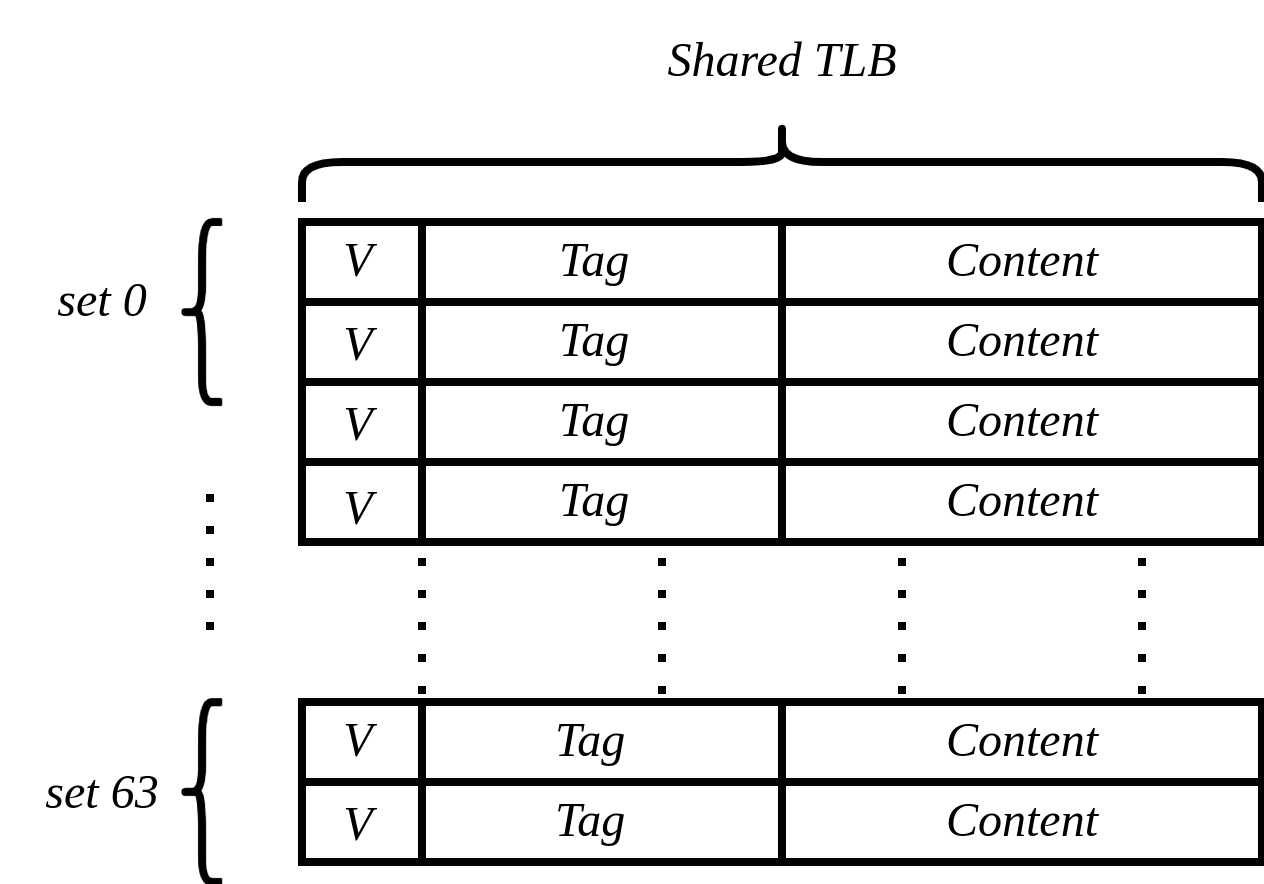

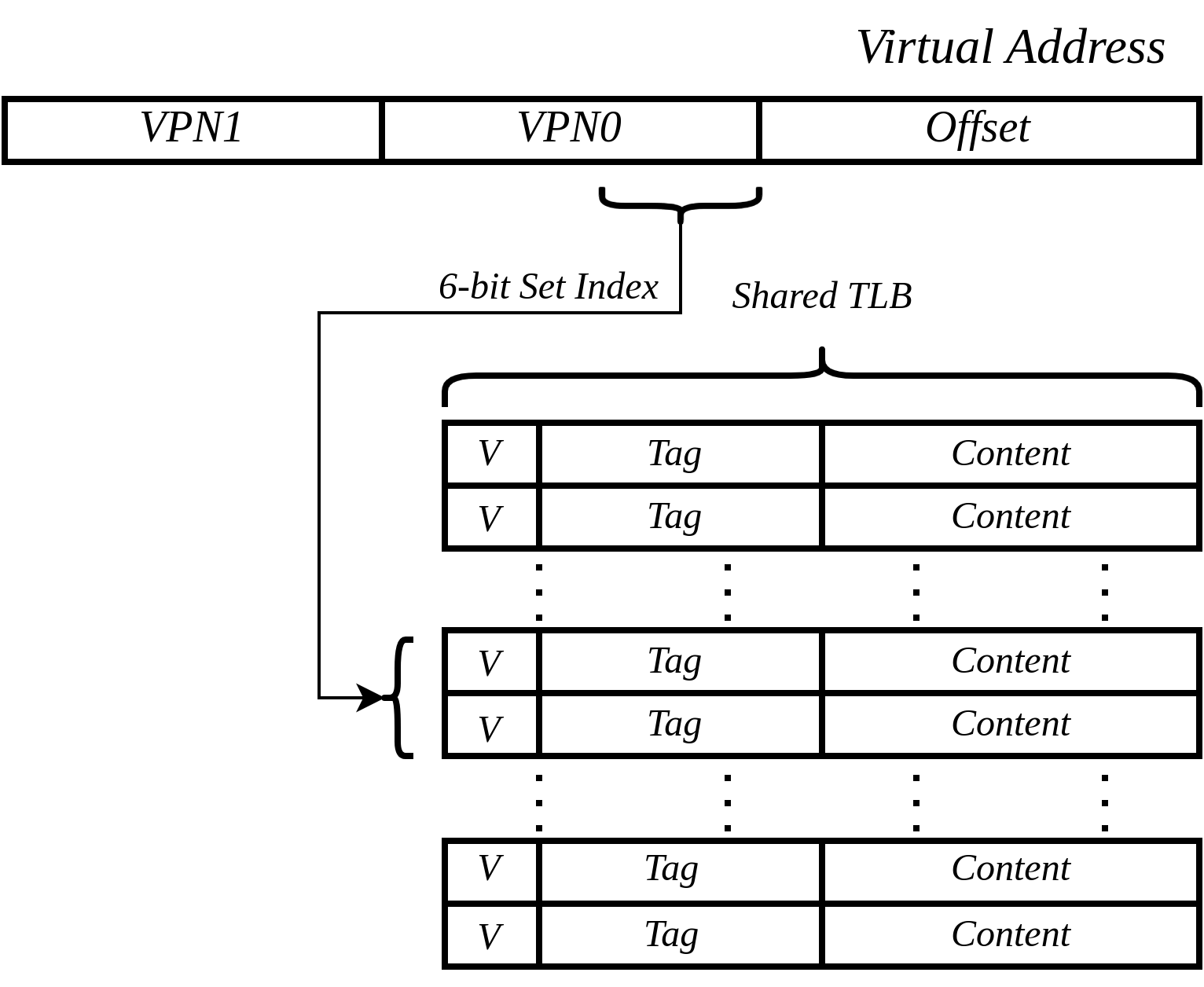

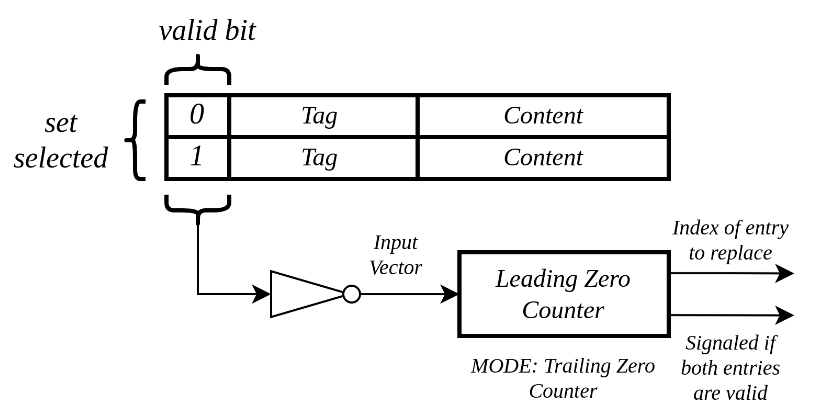

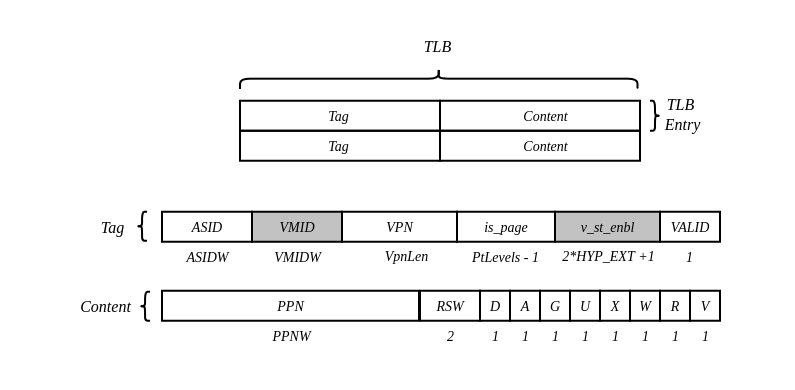

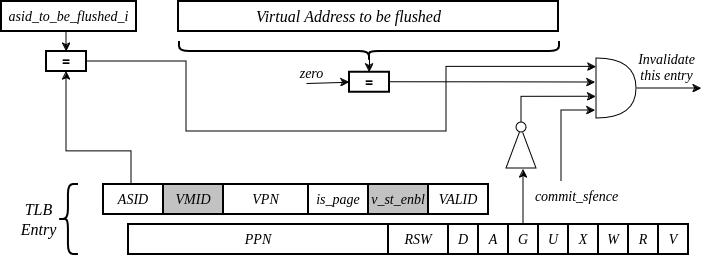

The number of TLB entries can be changed via a design parameter. Each TLB entry is made up of two fields: Tag and Content. The Tag field holds the virtual page number, ASID, VMID and page size, along with a valid bit (VALID) indicating that the entry is valid, and a v_st_enbl field that indicates at which level the translation is valid when hypervisor mode is enabled. The virtual page number is further split into several separate virtual page numbers according to the number of PtLevels used in each configuration. The Content field contains the physical page numbers along with a number of bits which specify various attributes of the physical page. Note that the V bit in the Content field is the V bit which is present in the page table in memory. It is copied from the page table, as is, and the VALID bit in the Tag is set based on its value. g_content is the equivalent for the g-stage translation. The TLB entry fields are shown in Figure 5. Fields colored in grey are used only when the H extension is implemented.

Figure 5: Fields in CVA6 TLB entry

The CVA6 TLB implements the following three functions:

Translation: This function implements the address lookup and match logic.

Update and Flush: This function implements the update and flush logic.

Pseudo Least Recently Used Replacement Policy: This function implements the replacement policy for TLB entries.

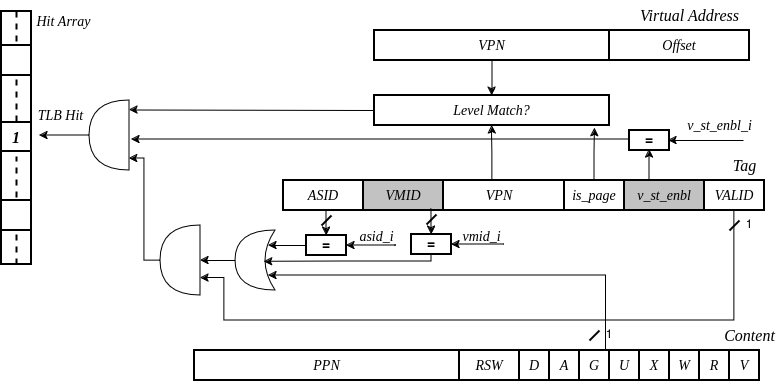

This function takes in the virtual address and certain other fields, examines the TLB to determine if the virtual page number of the page being accessed is in the TLB or not. If a TLB entry is found (TLB hit), the TLB returns the corresponding physical page number (PPN) which is then used to calculate the target physical address. The following checks are done as part of this lookup function to find a match in the TLB:

Validity Check: For a TLB hit, the associated TLB entry must be valid.

ASID, VMID and Global Flag Check: The TLB entry’s ASID must match the given ASID (ASID associated with the Virtual address). If the TLB entry’s Global bit (G) is set then this check is not done. This ensures that the translation is either specific to the provided ASID, or it is globally applicable. When the hypervisor extension is enabled, either the ASID or the VMID in the TLB entry must match ASID and/or VMID in the input, depending on which level(s) of translation is enabled. For the VMID the check is always applicable, regardless of G bit in the TLB entry.

Level VPN match: CVA6 implements a multi-level page table. As such the virtual address is broken up into multiple parts which are the virtual page number used in the different levels. So the condition that is checked next is that the virtual page number of the virtual address matches the virtual page number of the TLB entry at each level.

Page match: Without the Hypervisor extension, there is a match at a certain level X if the is_page component of the tag is set to 1 at level PtLevels-X. At level 0, page_match is always set to 1. When the Hypervisor extension is enabled, the conditions for a page match depend on the level. At the highest level (PT_LEVELS-1), there is a page match at the g and s stages (when enabled) if the corresponding is_page bit is set. If a stage is not enabled, its is_page is treated as 1. At level 0, page_match is always 1, as in the case without the Hypervisor extension. At the intermediate levels, page_match is determined using the merged final translation size from both stages and their enable signals.

Level match: The last condition to be checked at each page level, for a TLB hit, is that there is a VPN match for the current level and the higher ones, together with a page match at the current one. For example, if PtLevels=2, a match at level 2 occurs if there is a VPN match at level 2 and a page match at level 2. For level 1, there is a match if there is a VPN match at levels 2 and 1, together with a page match at level 1.

All the conditions listed above are checked against every TLB entry. If there is a TLB hit, the corresponding bit in the hit array is set. The following figure illustrates the TLB hit/miss process listed above.

Figure 6: Block diagram of CVA6 TLB hit or miss
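The lookup checks listed above (validity, ASID or global, and per-level VPN match combined with the page size) can be modeled in a few lines. This is a behavioral sketch only, without the Hypervisor extension and assuming Sv39; the `TlbEntry` fields, the `page_level` encoding (0 for 4 KiB, 1 for 2 MiB, 2 for 1 GiB) and the function names are hypothetical and do not mirror the RTL signal names.

```python
from dataclasses import dataclass
from typing import List

PAGE_OFFSET_BITS = 12
VPN_BITS = 9     # Sv39 assumption: 9 bits per VPN field
PT_LEVELS = 3

@dataclass
class TlbEntry:
    valid: bool
    asid: int
    is_global: bool
    vpn: int         # full VPN stored in the tag
    page_level: int  # hypothetical: 0 = 4 KiB, 1 = 2 MiB, 2 = 1 GiB
    ppn: int

def vpn_field(vpn: int, level: int) -> int:
    """Extract the VPN field used at the given page table level."""
    return (vpn >> (VPN_BITS * level)) & ((1 << VPN_BITS) - 1)

def entry_hits(entry: TlbEntry, vaddr: int, asid: int) -> bool:
    if not entry.valid:                                  # validity check
        return False
    if not (entry.is_global or entry.asid == asid):      # ASID / global check
        return False
    vpn = vaddr >> PAGE_OFFSET_BITS
    # VPN fields must match at the entry's page level and every higher level;
    # fields below the page level belong to the offset within the superpage.
    return all(vpn_field(vpn, lvl) == vpn_field(entry.vpn, lvl)
               for lvl in range(entry.page_level, PT_LEVELS))

def lookup(tlb: List[TlbEntry], vaddr: int, asid: int) -> List[bool]:
    """Fully associative lookup: one hit bit per entry."""
    return [entry_hits(e, vaddr, asid) for e in tlb]
```

Because every entry is compared, the result is a hit array rather than a single index, which matches the fully associative organization described earlier.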

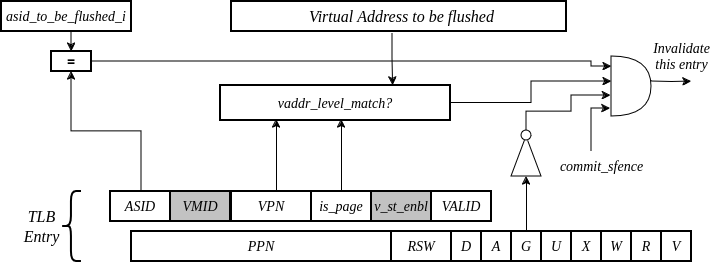

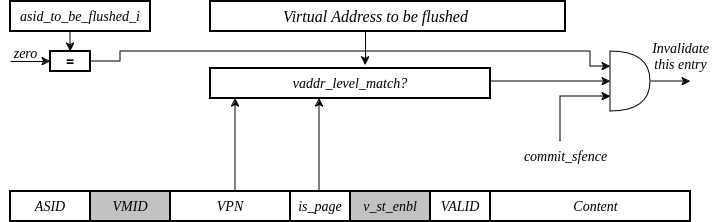

The SFENCE.VMA instruction can be used with certain specific source register specifiers (rs1 & rs2) to flush a specific TLB entry, some set of TLB entries or all TLB entries. Like all instructions, this action only takes place when the SFENCE.VMA instruction is committed (shown via the commit_sfence signal in the following figures). The behavior of the instruction is as follows:

If rs1 is not equal to x0 and rs2 is not equal to x0: Invalidate all TLB entries which contain leaf page table entries corresponding to the virtual address in rs1 (shown below as Virtual Address to be flushed) and that match the address space identifier as specified by integer register rs2 (shown below as asid_to_be_flushed_i), except for entries containing global mappings. This is referred to as the “SFENCE.VMA vaddr asid” case.

Figure 7: Invalidate TLB entry if ASID and virtual address match

If rs1 is equal to x0 and rs2 is equal to x0: Invalidate all TLB entries for all address spaces. This is referred to as the “SFENCE.VMA x0 x0” case.

Figure 8: Invalidate all TLB entries if both source register specifiers are x0

If rs1 is not equal to x0 and rs2 is equal to x0: invalidate all TLB entries that contain leaf page table entries corresponding to the virtual address in rs1, for all address spaces. This is referred to as the “SFENCE.VMA vaddr x0” case.

Figure 9: Invalidate TLB entry with matching virtual address for all address spaces

If rs1 is equal to x0 and rs2 is not equal to x0: Invalidate all TLB entries matching the address space identified by integer register rs2, except for entries containing global mappings. This is referred to as the “SFENCE.VMA x0 asid” case.

Figure 10: Invalidate TLB entry for matching ASIDs
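The four SFENCE.VMA cases above reduce to two independent conditions, one on the address and one on the ASID. The sketch below models that behavior under stated assumptions: Sv39 field widths, a hypothetical `TlbEntry` with a `page_level` field, and `None` standing in for a source register equal to x0. It is an illustration of the rules, not the CVA6 flush logic.

```python
from dataclasses import dataclass
from typing import List, Optional

PAGE_OFFSET_BITS = 12
VPN_BITS = 9  # Sv39 assumption

@dataclass
class TlbEntry:
    valid: bool
    asid: int
    is_global: bool
    vpn: int
    page_level: int = 0  # hypothetical: 0 = 4 KiB, 1 = 2 MiB, 2 = 1 GiB

def vaddr_matches(entry: TlbEntry, vaddr: int) -> bool:
    """Compare only the VPN bits that the entry's page size translates."""
    shift = VPN_BITS * entry.page_level
    return ((vaddr >> PAGE_OFFSET_BITS) >> shift) == (entry.vpn >> shift)

def sfence_vma(tlb: List[TlbEntry], vaddr: Optional[int], asid: Optional[int]) -> None:
    """Invalidate entries; vaddr=None models rs1=x0, asid=None models rs2=x0."""
    for entry in tlb:
        addr_ok = vaddr is None or vaddr_matches(entry, vaddr)
        # When an ASID is given, global mappings are exempt from the flush.
        asid_ok = asid is None or (entry.asid == asid and not entry.is_global)
        if addr_ok and asid_ok:
            entry.valid = False
```

With both arguments set to `None` every entry is invalidated, and with only `vaddr` given, global mappings for that address are flushed as well, as in the "vaddr x0" case.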

The TLB fully implements the hypervisor flush instructions, i.e., HFENCE.VVMA and HFENCE.GVMA, including filtering by ASID and virtual address. To support nested translation, it supports the two translation stages, including access permissions (rwx) and VMIDs. This is done analogously to the SFENCE.VMA cases explained above.

HFENCE.VVMA vaddr asid case: Invalidate all TLB entries which contain leaf page table entries corresponding to the Virtual Address to be flushed and that match the address space identifiers as specified by ASID_to_be_flushed_i and lu_VMID, except for entries containing global mappings.

HFENCE.VVMA x0 x0 case: Invalidate all TLB entries for all address spaces if current VMID matches and ASID is 0 and vaddr to be flushed is 0.

HFENCE.VVMA vaddr x0 case: Invalidate all TLB entries which contain leaf page table entries corresponding to the Virtual Address to be flushed if current VMID matches and ASID to be flushed is 0.

HFENCE.VVMA x0 asid case: Invalidate all TLB entries matching the address space identified by ASID_to_be_flushed_i and lu_VMID when vaddr to be flushed is 0, except for entries containing global mappings.

HFENCE.GVMA gpaddr vmid case: Invalidate all TLB entries which contain leaf page table entries corresponding to the gpaddr to be flushed and that match the VMID.

HFENCE.GVMA x0 x0 case: Invalidate all TLB entries for all address spaces if VMID is 0 and gpaddr to be flushed is 0.

HFENCE.GVMA gpaddr x0 case: Invalidate all TLB entries which contain leaf page table entries corresponding to the gpaddr to be flushed if VMID to be flushed is 0.

HFENCE.GVMA x0 vmid case: Invalidate all TLB entries matching the address space identified by VMID_to_be_flushed_i when gpaddr to be flushed is 0.

When a valid TLB update request is signaled by the shared TLB, and the replacement policy selects a specific TLB entry for the update, the corresponding entry’s tag is updated with the new tag, and its associated content is refreshed with the information from the update request. This ensures that the TLB entry accurately reflects the new translation information.

Pseudo Least Recently Used Replacement Policy

Cache replacement algorithms are used to determine which TLB entry should be replaced, because it is not likely to be used in the near future. The Pseudo-Least-Recently-Used (PLRU) is a cache entry replacement algorithm, derived from Least-Recently-Used (LRU) cache entry replacement algorithm, used by the TLB. Instead of precisely tracking recent usage as the LRU algorithm does, PLRU employs an approximate measure to determine which entry in the cache has not been recently used and as such can be replaced.

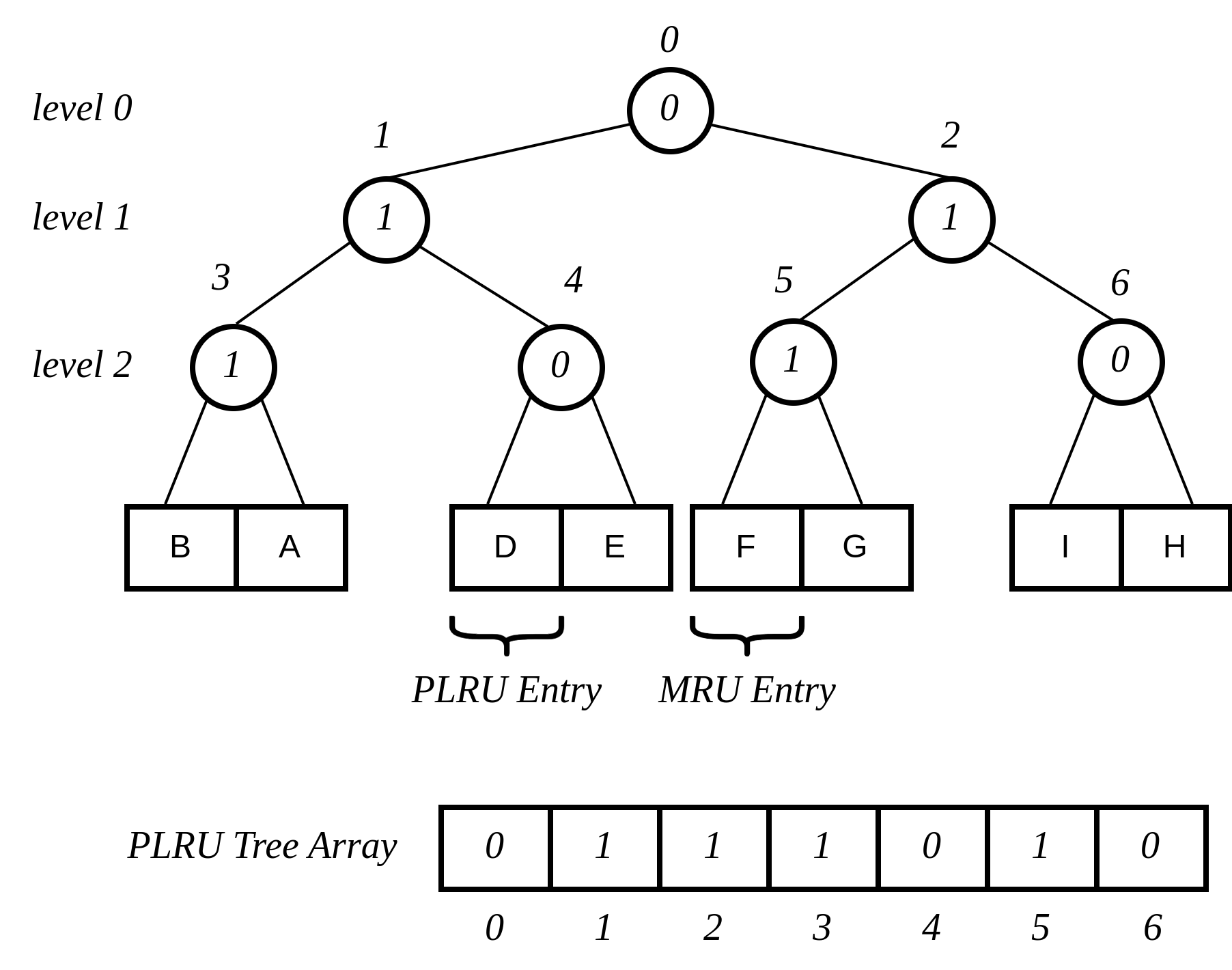

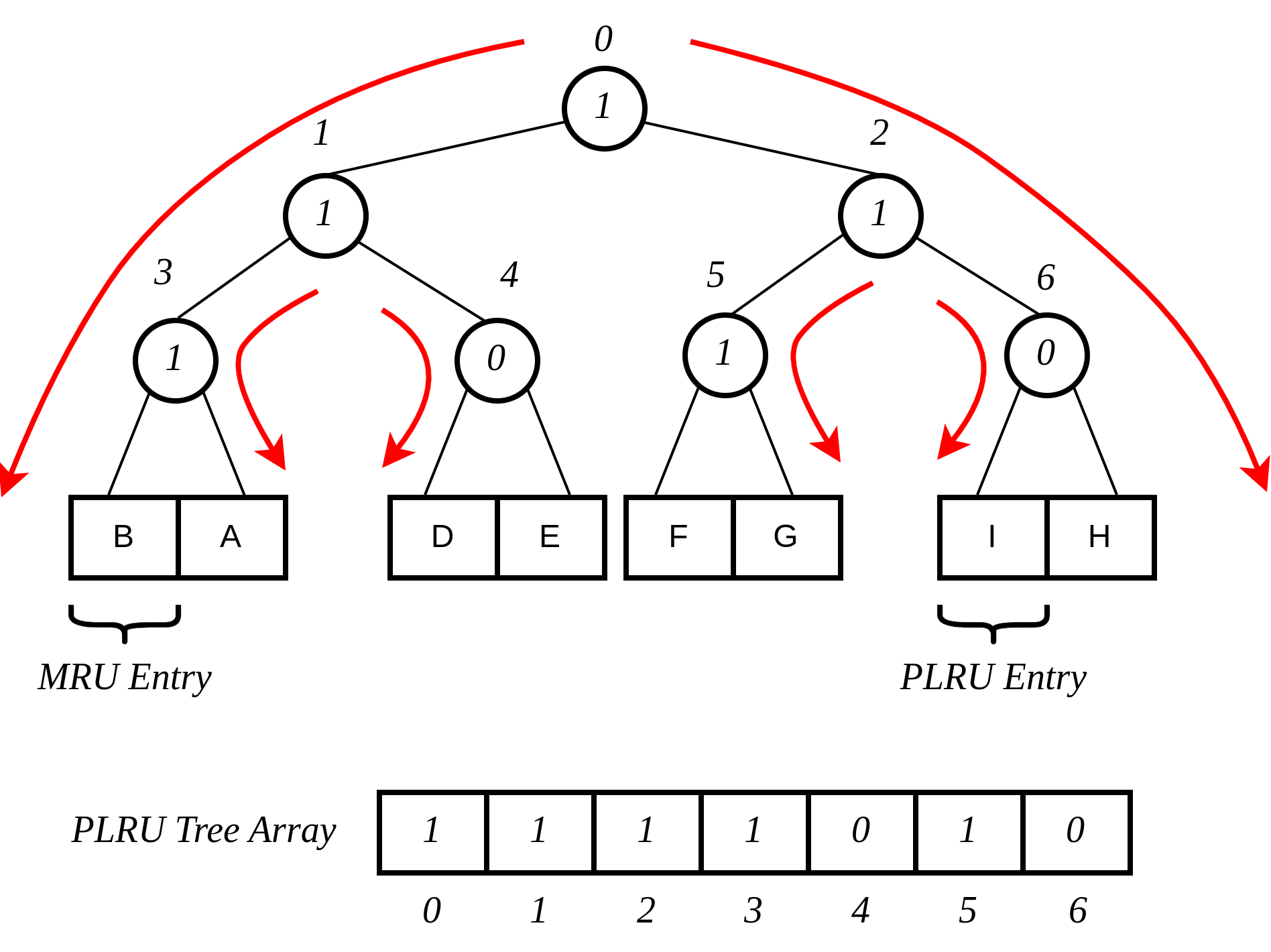

CVA6 implements the PLRU algorithm via the Tree-PLRU method, which uses a binary tree. The TLB entries are the leaf nodes of the tree. Each internal node of the tree consists of a single bit, referred to as the state bit or plru bit, indicating which subtree contains the (pseudo) least recently used entry (the PLRU): 0 for the left-hand subtree and 1 for the right-hand subtree. Starting at the root and following these bits down the tree, the leaf node reached corresponds to the PLRU entry, which can be replaced. Having accessed an entry (so as to replace it), we need to promote that entry to be the Most Recently Used (MRU) entry. This is done by updating the value of each node along the access path to point away from that entry. If the accessed entry is a right child, its parent node value is set to 0; if that parent is the left child of its own parent (the grandparent of the accessed entry), the grandparent’s value is set to 1, and so on all the way up to the root node.

The PLRU binary tree is implemented as an array of node values. Nodes are organized in the array based on levels, with those from lower levels appearing before higher ones. Furthermore those on the left side of a node appear before those on the right side of a node. The figure below shows a tree and the corresponding array.

Figure 11: PLRU Tree Indexing

For an n-way associative TLB, n - 1 internal nodes are required in the tree. With those nodes, two operations need to be performed efficiently.

Promote the accessed entry to be MRU

Identify which entry to replace (i.e. the PLRU entry)

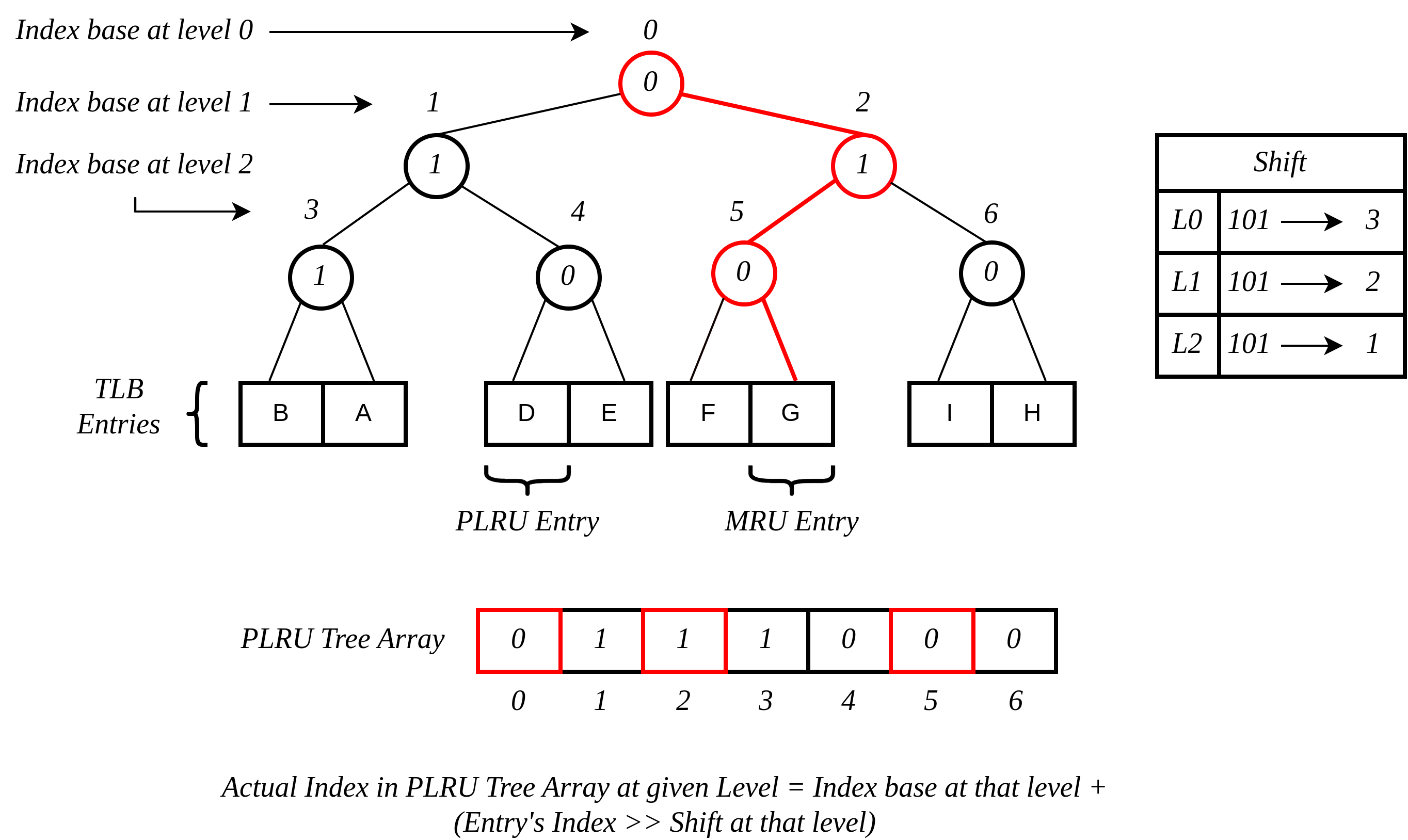

For a TLB entry which is accessed, the following steps are taken to make it the MRU:

Iterate through each level of the binary tree.

Calculate the index of the leftmost child within the current level. Let us call that index the index base.

Calculate the shift amount to identify the relevant node based on the level and TLB entry index.

Calculate the new value that the node should have in order to make the accessed entry the Most Recently Used (MRU). The new value of each node is the inverse of the corresponding bit of the TLB entry index: the MSB for the root node, bit MSB - 1 for the node at the next level, and so on.

Assign this new value to the relevant node, ensuring that the hit entry becomes the MRU within the binary tree structure.

At level 0, no bit of the TLB entry’s index determines the offset from the index base, because the root is the only node at that level. At level 1, the MSB of the entry’s index determines the offset from the index base. At level 2, the two most significant bits of the entry’s index determine the offset from the index base, because there are 4 nodes at level 2, and so on.

Figure 12: Promote Entry to be MRU

In the above figure, the entry at index 5 is accessed. To make it the MRU entry, every node along the access path should point away from it. Entry 5 is a right child, so its parent’s plru bit is set to 0. That parent is a left child, so the grandparent’s plru bit is set to 1. The grandparent is a right child, so the great-grandparent’s (the root’s) plru bit is set to 0.
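The promotion steps above can be sketched as a small behavioral model in Python. This is an illustration rather than the CVA6 RTL: the function name `promote_to_mru` and the list-based tree are assumptions, and the number of TLB entries is assumed to be a power of two.

```python
def promote_to_mru(tree, entry, num_entries):
    """Return a copy of `tree` in which `entry` is the most recently used.

    `tree` is the level-ordered list of n - 1 plru bits (root at index 0).
    Hypothetical helper; `num_entries` is assumed to be a power of two.
    """
    depth = num_entries.bit_length() - 1       # log2(num_entries)
    updated = list(tree)
    for lvl in range(depth):
        idx_base = (1 << lvl) - 1              # leftmost node of this level
        shift = depth - lvl                    # index bits at or below this level
        node = idx_base + (entry >> shift)     # node on the access path
        index_bit = (entry >> (shift - 1)) & 1
        updated[node] = index_bit ^ 1          # point away from the entry
    return updated


# Entry 5 (0b101) in an 8-entry TLB. The starting tree points at entry 5.
tree_after = promote_to_mru([1, 0, 0, 0, 0, 1, 0], entry=5, num_entries=8)
print(tree_after)  # [0, 0, 1, 0, 0, 0, 0]
```

Only nodes 0, 2 and 5 lie on the path to entry 5, so only those three bits change: the root becomes 0, node 2 becomes 1 and node 5 becomes 0, matching the example for entry 5.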

Entry Selection for Replacement

Every TLB entry is checked to determine whether it is the replacement candidate. The following steps are taken:

Iterate through each level of the binary tree.

Calculate the index of the leftmost child within the current level. Let us call that index the index base.

Calculate the shift amount to identify the relevant node based on the level and TLB entry index.

If the corresponding bit of the entry’s index matches the value of the node being traversed at the current level, keep the replacement signal high for that entry; otherwise, set the replacement signal to low.

Figure 13: Possible path traverse for entry selection for replacement

Figure 13 shows every possible path that can be traversed to find the PLRU entry. If the plru bit at each level matches the corresponding bit of the entry’s index, that entry is the next one to replace. Table 9 shows the entry selection for replacement.

Table 9: Entry Selection for Replacement

| Path Traverse | PLRU Bits | Entry to replace |
|---|---|---|
| 0 -> 1 -> 3 | 000 | 0 |
| 0 -> 1 -> 3 | 001 | 1 |
| 0 -> 1 -> 4 | 010 | 2 |
| 0 -> 1 -> 4 | 011 | 3 |
| 0 -> 2 -> 5 | 100 | 4 |
| 0 -> 2 -> 5 | 101 | 5 |
| 0 -> 2 -> 6 | 110 | 6 |
| 0 -> 2 -> 6 | 111 | 7 |
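The selection steps can be modeled in the same way. The sketch below is a behavioral illustration in Python, not the CVA6 RTL: `select_replacement` is a hypothetical name, the tree is a level-ordered list of plru bits, and the number of entries is assumed to be a power of two.

```python
def select_replacement(tree, num_entries):
    """Return one replacement flag per TLB entry.

    An entry keeps its flag high only if, at every level, the plru bit of
    the node on its path equals the corresponding bit of the entry index.
    """
    depth = num_entries.bit_length() - 1       # log2(num_entries)
    replace_en = []
    for entry in range(num_entries):
        en = True
        for lvl in range(depth):
            idx_base = (1 << lvl) - 1           # leftmost node of this level
            shift = depth - lvl
            node = idx_base + (entry >> shift)  # node on this entry's path
            index_bit = (entry >> (shift - 1)) & 1
            en = en and tree[node] == index_bit
        replace_en.append(en)
    return replace_en


# Path 0 -> 2 -> 5 with plru bits 1, 0, 1 selects entry 5.
tree = [1, 0, 0, 0, 0, 1, 0]
print(select_replacement(tree, 8).index(True))  # 5
```

For any tree state exactly one flag is high, because the plru bits describe a single root-to-leaf path.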

Page Table Walker

The “CVA6 Page Table Walker (PTW)” is a hardware module designed to facilitate the translation of virtual addresses into physical addresses, a crucial task in memory access management. The Hypervisor extension specifies a new translation stage (G-stage) to translate guest-physical addresses into host-physical addresses.

Figure 19: Input and Outputs of Page Table Walker

The PTW module operates through various states, each with its specific function, such as handling memory access requests, validating page table entries, and responding to errors.

Key Features and Capabilities

Key features of this PTW module include support for multiple levels of page tables (PtLevels), accommodating instruction and data page table walks. It rigorously validates and verifies page table entries (PTEs) to ensure translation accuracy and adherence to access permissions. This module seamlessly integrates with the CVA6 processor’s memory management unit (MMU), which governs memory access control. It also takes into account global mapping, access flags, and privilege levels during the translation process, ensuring that memory access adheres to the processor’s security and privilege settings.

Exception Handling

In addition to its translation capabilities, the PTW module is equipped to detect and manage errors, including page-fault exceptions and access exceptions, contributing to the robustness of the memory access system. It works harmoniously with physical memory protection (PMP) configurations, a critical aspect of modern processors’ memory security. Moreover, the module efficiently processes virtual addresses, generating corresponding physical addresses, all while maintaining speculative translation, a feature essential for preserving processor performance during memory access operations.

Signal Description

Table 12: Signal Description of PTW

| Signal | IO | Connection | Type | Description |
|---|---|---|---|---|
| | in | Subsystem | logic | Subsystem Clock |
| | in | Subsystem | logic | Asynchronous reset active low |
| | in | Controller | logic | Sfence Committed |
| | out | MMU | logic | Output signal indicating whether the Page Table Walker (PTW) is currently active |
| | out | MMU | logic | Indicates whether it is an instruction page table walk |
| | out | MMU | logic | Output signal indicating that an error occurred during PTW operation |
| | out | MMU | logic | Output signal indicating that an error occurred during PTW operation at the G stage |
| | out | MMU | logic | Output signal indicating that an error occurred during PTW operation at the G stage during S stage |
| | out | MMU | logic | Output signal indicating that a PMP (Physical Memory Protection) access exception occurred during PTW operation |
| | in | CSR RegFile | logic | Enables address translation request for instruction |
| | in | CSR RegFile | logic | Enables virtual memory translation for instructions |
| | in | CSR RegFile | logic | Enables address translation request for load or store |
| | in | CSR RegFile | logic | Enables virtual memory translation for load or store |
| | in | CSR RegFile | logic | Virtualization mode state |
| | in | CSR RegFile | logic | Virtualization mode state |
| | in | Store / Load Unit | logic | Indicates that the instruction is a hypervisor load store with execute permissions |
| | in | Store Unit | logic | Input signal indicating whether the translation was triggered by a store operation |
| | in | Cache Subsystem | dcache_req_o_t | D Cache Data Requests |
| | out | Cache Subsystem / Perf Counter | dcache_req_i_t | D Cache Data Response |
| | out | Shared TLB | tlb_update_cva6_t | Updated tag and content of shared TLB |
| | out | MMU | logic[VLEN-1:0] | Updated VADDR from shared TLB |
| | in | CSR RegFile | logic [ASIDW-1:0] | ASID for the lookup |
| | in | CSR RegFile | logic [ASIDW-1:0] | ASID for the lookup for virtual supervisor when Hypervisor extension is enabled |
| | in | CSR RegFile | logic [VMIDW-1:0] | VMID for the lookup when Hypervisor extension is enabled |
| | in | Shared TLB | logic | Access request of shared TLB |
| | in | Shared TLB | logic | Indicate shared TLB hit |
| | in | Shared TLB | logic[VLEN-1:0] | Virtual Address from shared TLB |
| | in | Shared TLB | logic | Indicate request to ITLB |
| | in | CSR RegFile | logic [PPNW-1:0] | PPN of top level page table from SATP register |
| | in | CSR RegFile | logic [PPNW-1:0] | PPN of top level page table from VSATP register when Hypervisor extension is enabled |
| | in | CSR RegFile | logic [PPNW-1:0] | PPN of top level page table from HGATP register when Hypervisor extension is enabled |
| | in | CSR RegFile | logic | Make Executable Readable bit in xSTATUS CSR register |
| | in | CSR RegFile | logic | Virtual Make Executable Readable bit in xSTATUS CSR register for virtual supervisor when Hypervisor extension is enabled |
| | out | OPEN | logic | Indicate a shared TLB miss |
| | in | CSR RegFile | pmpcfg_t[15:0] | PMP configuration |
| | in | CSR RegFile | logic[15:0][PLEN-3:0] | PMP Address |
| | out | MMU | logic[PLEN-1:0] | Bad Physical Address in case of access exception |
| | out | MMU | logic[GPLEN-1:0] | Bad Guest Physical Address in case of access exception when Hypervisor is enabled |

Table 13: D Cache Response Struct (dcache_req_i_t)

| Signal | Type | Description |
|---|---|---|
| | logic [DCACHE_INDEX_WIDTH-1:0] | Index of the Dcache Line |
| | logic [DCACHE_TAG_WIDTH-1:0] | Tag of the Dcache Line |
| | xlen_t | Data to write in the Dcache |
| | logic [DCACHE_USER_WIDTH-1:0] | data_wuser |
| | logic | Data Request |
| | logic | Data Write enabled |
| | logic [(XLEN/8)-1:0] | Data Byte enable |
| | logic [1:0] | Size of data |
| | logic [DCACHE_TID_WIDTH-1:0] | Data ID |
| | logic | Kill the D cache request |
| | logic | Indicate that the tag is valid |

Table 14: D Cache Request Struct (dcache_req_o_t)

| Signal | Type | Description |
|---|---|---|
| | logic | Grant of data is given in response to the data request |
| | logic | Indicate that data is valid which is sent by D cache |
| | logic [DCACHE_TID_WIDTH-1:0] | Requested data ID |
| | xlen_t | Data from D cache |
| | logic [DCACHE_USER_WIDTH-1:0] | Requested data user |

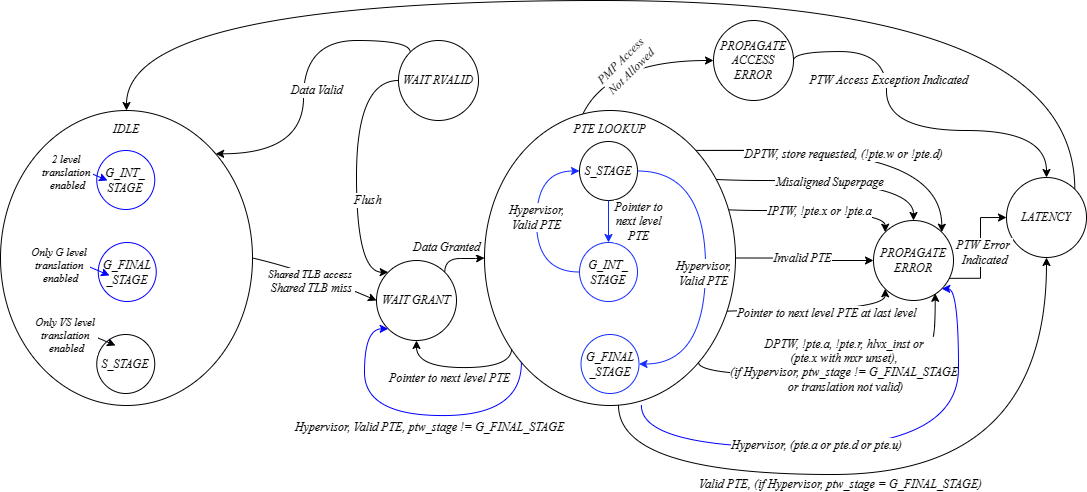

The Page Table Walker is implemented as a finite state machine. It listens to the shared TLB for incoming translation requests. If there is a shared TLB miss, it saves the virtual address and starts the page table walk. In CVA6, the Page Table Walker transitions between 7 states.

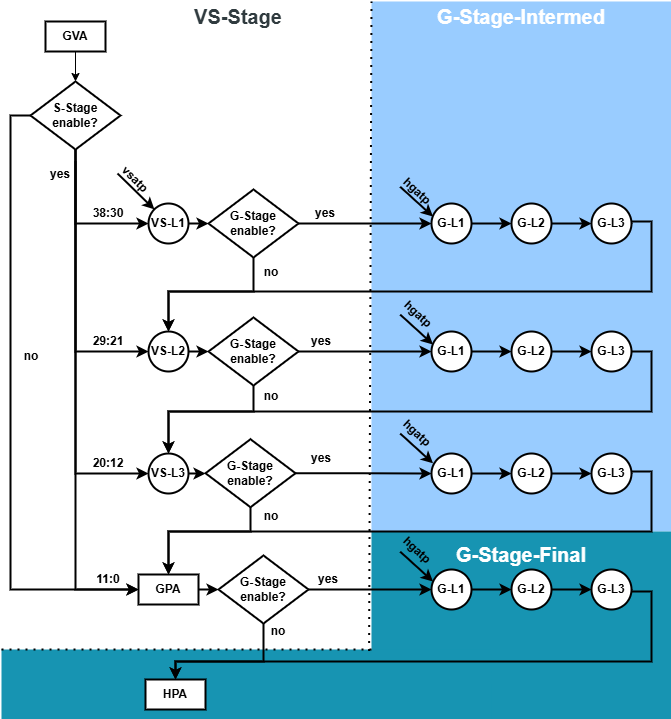

IDLE: The initial state, in which the PTW awaits a trigger, often a shared TLB miss, to initiate a memory access request. With the Hypervisor extension, the stage to which the translation belongs is determined by the enable_translation_XX and en_ld_st_translation_XX signals. There are 3 possible stages: (i) S-Stage - the PTW is translating a guest-virtual address into a guest-physical address; (ii) G-Stage Intermed - the PTW is translating a memory access made from the VS-Stage during the walk into a host-physical address; and (iii) G-Stage Final - the PTW is translating the final output address from the VS-Stage into a host-physical address. When the Hypervisor extension is not enabled, the PTW is always in S_STAGE.

WAIT_GRANT: Request memory access and wait for data grant

PTE_LOOKUP: Once granted access, the PTW examines the valid Page Table Entry (PTE), checking attributes to determine the appropriate course of action. Depending on the STAGE determined in the previous state, pptr and other attributes are updated accordingly.

PROPAGATE_ERROR: If the PTE is invalid, this state handles the propagation of an error, often leading to a page-fault exception due to non-compliance with access conditions.

PROPAGATE_ACCESS_ERROR: Propagate access fault if access is not allowed from a PMP perspective

WAIT_RVALID: After processing a PTE, the PTW waits for a valid data signal, indicating that relevant data is ready for further processing.

LATENCY: Introduces a delay to account for synchronization or timing requirements between states.

The next figure shows the state diagram of the PTW FSM. The blue lines correspond to transitions that exist only when hypervisor extension is enabled.

Figure 20: State Machine Diagram of CVA6 PTW

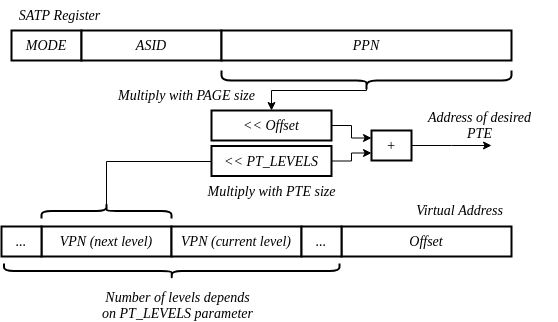

IDLE state

In the IDLE state of the Page Table Walker (PTW) finite state machine, the system awaits a trigger to initiate the page table walk process. This trigger is often prompted by a Shared Translation Lookaside Buffer (TLB) miss, indicating that the required translation is not present in the shared TLB cache. The PTW’s behavior in this state is explained as follows:

The top-most page table is selected for the page table walk. In all configurations, the walk starts at level 0.

In the IDLE state, translations are assumed to be invalid in all addressing spaces.

The signal indicating the instruction page table walk is set to 0.

A conditional check is performed: if there is a shared TLB access request and the entry is not found in the shared TLB (indicating a shared TLB miss), the following steps are executed:

The address of the desired Page Table Entry within the level 0 page table is calculated by multiplying the Physical Page Number (PPN) of the level 0 page table from the SATP register by the page size. This result is then added to the product of the Virtual Page Number and the size of a page table entry. Depending on the translation indicated by the enable_translation_XX, en_ld_st_translation_XX and v_i signals, the corresponding register (satp_ppn_i, vsatp_ppn_i or hgatp_ppn_i) and bits of the VPN are used.

Figure 21: Address of desired PTE at next level of Page Table
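The address calculation can be illustrated with a short Python sketch. The constants below are assumptions chosen to match an Sv39-style configuration (4 KiB pages, 8-byte PTEs, 9-bit VPN fields, three levels); the function name `pte_address` is hypothetical, and the sketch covers only the case without the Hypervisor extension.

```python
PAGE_SIZE = 4096        # bytes per page (assumed: 4 KiB)
PTE_SIZE = 8            # bytes per page table entry (assumed: Sv39)
VPN_BITS = 9            # bits per VPN field (assumed: Sv39)
PT_LEVELS = 3           # number of page table levels (assumed: Sv39)
PAGE_OFFSET_BITS = 12   # log2(PAGE_SIZE)


def pte_address(table_ppn, vaddr, level=0):
    """Address of the PTE selected by `vaddr` in the table at `table_ppn`.

    `level` follows the text: level 0 is the top-most page table, which is
    indexed by the most significant VPN field.
    """
    vpn_shift = PAGE_OFFSET_BITS + VPN_BITS * (PT_LEVELS - 1 - level)
    vpn = (vaddr >> vpn_shift) & ((1 << VPN_BITS) - 1)
    return table_ppn * PAGE_SIZE + vpn * PTE_SIZE


# Root table at PPN 0x80000; the top-level VPN field of the address is 2.
print(hex(pte_address(0x80000, 0x8020_1000)))  # 0x80000010
```

The same function applies to the later levels of the walk, with `table_ppn` taken from the PPN of the PTE read at the previous level.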

The signal indicating whether it’s an instruction page table walk is updated based on the itlb_req_i signal.

The ASID, VMID and virtual address are saved for the page table walk.

A shared TLB miss is indicated.

WAIT GRANT state

In the WAIT_GRANT state of the Page Table Walker’s finite state machine, a data request is sent to retrieve memory information. It waits for a data grant signal from the Dcache controller, remaining in this state until granted. Once granted, it activates a tag valid signal, marking data validity. The state then transitions to “PTE_LOOKUP” for page table entry lookup.

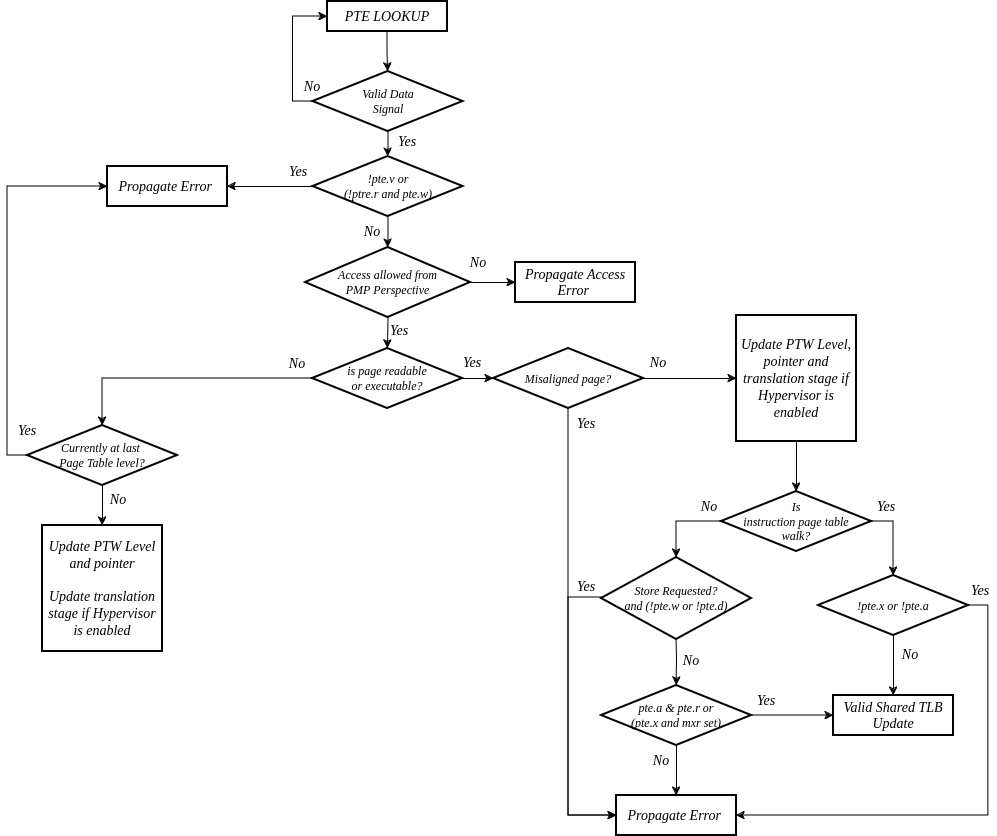

PTE LOOKUP state

In the PTE_LOOKUP state of the Page Table Walker (PTW) finite state machine, the PTW performs the actual lookup and evaluation of the page table entry (PTE) based on the virtual address translation. The behavior and operations performed in this state are detailed as follows:

The state waits for a valid signal indicating that the data from the memory subsystem, specifically the page table entry, is available for processing.

Upon receiving the valid signal, the PTW proceeds with examining the retrieved page table entry to determine its properties and validity.

The state checks if the global mapping bit in the PTE is set, and if so, sets the global mapping signal to indicate that the translation applies globally across all address spaces.

The state distinguishes between two cases: Invalid PTE and Valid PTE.

If the valid bit of the PTE is not set, or if the PTE has reserved RWX field encodings, it signifies an Invalid PTE. In such cases, the state transitions to the “PROPAGATE_ERROR” state, indicating a page-fault exception due to an invalid translation.

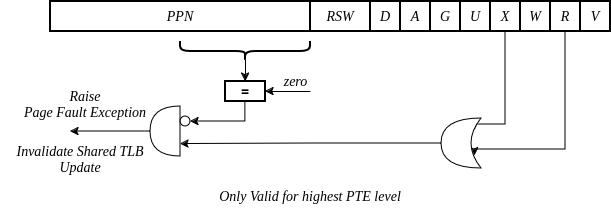

Figure 22: Invalid PTE and reserved RWX encoding leads to page fault

If the PTE is valid, by default, the state advances to the “LATENCY” state, indicating a period of processing latency. Additionally, if the “read” flag (pte.r) or the “execute” flag (pte.x) is set, the PTE is treated as a valid leaf entry that maps a page rather than as a pointer to the next level of the page table.

Within the Valid PTE scenario, the ptw_stage is checked to decide the next state. When no Hypervisor Extension is used, the stage is always S_STAGE and has no impact on the progress of the table walk. However, when the Hypervisor Extension is used, if the stage is not the G_FINAL_STAGE, it has to continue advancing the different stages before proceeding with the translation (nested translation). The next diagram shows the evolution of ptw_stage through the nested translation process:

Figure 23: Nested translation: ptw_stage

In this case (valid PTE), the state machine goes back to WAIT_GRANT state. Afterwards, the state performs further checks based on whether the translation is intended for instruction fetching or data access:

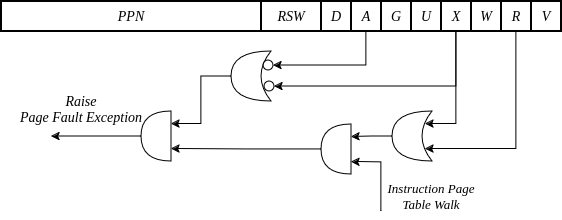

For an instruction page table walk, if the page is not executable (pte.x is not set) or not marked as accessed (pte.a is not set), the state transitions to the “PROPAGATE_ERROR” state. Otherwise, the translation is valid. When the Hypervisor Extension is enabled, a valid translation also requires being in the G_FINAL_STAGE, or the G stage being disabled.

Figure 24: For Instruction Page Table Walk

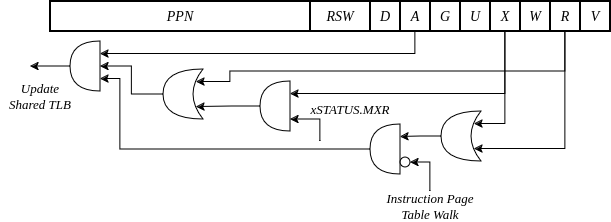

For data page table walk, the state checks if the page is readable (pte.r is set) or if the page is executable only but made readable by setting the MXR bit in xSTATUS CSR register. If either condition is met, it indicates a valid translation. If not, the state transitions to the “PROPAGATE_ERROR” state. When Hypervisor Extension is enabled, a valid translation also requires that it is in the G_FINAL_STAGE or the G stage is not enabled.

Figure 25: Data Access Page Table Walk

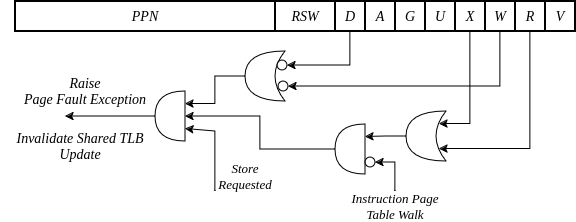

If the access is intended for storing data, additional checks are performed: If the page is not writable (pte.w is not set) or if it is not marked as dirty (pte.d is not set), the state transitions to the “PROPAGATE_ERROR” state.

Figure 26: Data Access Page Table Walk, Store requested

The state also checks for potential misalignment issues in the translation: If the current page table level is the first level and if the PPN in PTE is not zero, it indicates a misaligned superpage, leading to a transition to the “PROPAGATE_ERROR” state.

Figure 27: Misaligned Superpage Check

If the PTE is valid but the page is neither readable nor executable, the PTW recognizes the PTE as a pointer to the next level of the page table, indicating that additional translation information can be found in the referenced page table at a lower level.

If the current page table level is not the last level, the PTW proceeds to switch to the next level page table, updating the next level pointer and calculating the address for the next page table entry using the Physical Page Number from the PTE and the index from virtual address. Depending on the level and ptw_stage, the pptr is updated accordingly.

The state then transitions to the “WAIT_GRANT” state, indicating that the PTW is awaiting the grant signal to proceed with requesting the next level page table entry. If Hypervisor Extension is used and the page has already been accessed, is dirty or is accessible only in user mode, the state goes to PROPAGATE_ERROR.

If the current level is already the last level, an error is flagged, and the state transitions to the “PROPAGATE_ERROR” state, signifying an unexpected situation where the PTW is already at the last level page table.

If the translation access is found to be restricted by the Physical Memory Protection (PMP) settings (allow_access is false), the state updates the shared TLB update signal to indicate that the TLB entry should not be updated. Additionally, the saved address for the page table walk is restored to its previous value, and the state transitions to the “PROPAGATE_ACCESS_ERROR” state.

Lastly, if the data request for the page table entry was granted, the state indicates to the cache subsystem that the tag associated with the data is now valid.

Figure 28: Flow Chart of PTE LOOKUP State
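The decision flow of the PTE LOOKUP state can be summarized by the simplified Python sketch below. It is not the CVA6 implementation: it ignores the Hypervisor extension and the PMP check, assumes the standard RISC-V PTE flag layout (V, R, W, X, U, G, A, D in bits 0 to 7, PPN from bit 10) with Sv39-style 9-bit VPN fields, and requires the accessed bit for data walks as well, which is an assumption carried over from the instruction case. The misaligned superpage check is generalized to every level above the last one. The name `pte_lookup` and its parameters are hypothetical.

```python
V, R, W, X, U, G, A, D = (1 << i for i in range(8))  # RISC-V PTE flag bits
PPN_SHIFT = 10   # PPN starts at bit 10 of the PTE
VPN_BITS = 9     # assumed: Sv39-style VPN fields
LAST_LEVEL = 2   # assumed: three levels, numbered 0 (top) to 2


def pte_lookup(pte, level, is_instr, is_store=False, mxr=False):
    """Return the name of the next PTW state for a fetched PTE."""
    valid = bool(pte & V)
    r, w, x = bool(pte & R), bool(pte & W), bool(pte & X)
    a, d = bool(pte & A), bool(pte & D)
    ppn = pte >> PPN_SHIFT

    # Invalid PTE or reserved RWX encoding (writable but not readable).
    if not valid or (w and not r):
        return "PROPAGATE_ERROR"

    if r or x:  # leaf PTE: the walk ends here
        if is_instr:
            ok = x and a
        else:
            ok = a and (r or (x and mxr))
            if is_store and not (w and d):
                ok = False
        # Superpage: the PPN bits below the current level must be zero.
        low_bits = VPN_BITS * (LAST_LEVEL - level)
        if ppn & ((1 << low_bits) - 1):
            ok = False
        return "LATENCY" if ok else "PROPAGATE_ERROR"

    # Pointer to the next level; not allowed at the last level.
    if level == LAST_LEVEL:
        return "PROPAGATE_ERROR"
    return "WAIT_GRANT"


leaf = (0x80000 << PPN_SHIFT) | V | R | X | A
pointer = (0x80001 << PPN_SHIFT) | V
print(pte_lookup(leaf, level=2, is_instr=True))                   # LATENCY
print(pte_lookup(leaf, level=2, is_instr=False, is_store=True))   # PROPAGATE_ERROR
print(pte_lookup(pointer, level=0, is_instr=False))               # WAIT_GRANT
```

The store case fails because the example leaf is neither writable nor dirty, while the pointer PTE sends the walker back to WAIT_GRANT to fetch the next-level entry.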

PROPAGATE ERROR state

This state indicates a detected error in the page table walk process, and an error signal is asserted to indicate the Page Table Walker’s error condition, triggering a transition to the “LATENCY” state for error signal propagation.

PROPAGATE ACCESS ERROR state

This state indicates a detected access error in the page table walk process, and an access error signal is asserted to indicate the Page Table Walker’s access error condition, triggering a transition to the “LATENCY” state for access error signal propagation.

WAIT RVALID state

This state waits until it gets the “read valid” signal, and when it does, it’s ready to start a new page table walk.

LATENCY state

The LATENCY state introduces a latency period to allow for necessary system actions or signals to stabilize. After the latency period, the FSM transitions back to the IDLE state, indicating that the system is prepared for a new translation request.
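The transitions between the seven states described in this section can be collected into one behavioral sketch. The Python model below is an illustration under stated assumptions, not the CVA6 RTL: the `next_state` function and its parameter names are hypothetical, the outcome of the PTE evaluation is passed in as `lookup_result`, and flush handling and the Hypervisor-specific transitions are left out.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    WAIT_GRANT = auto()
    PTE_LOOKUP = auto()
    PROPAGATE_ERROR = auto()
    PROPAGATE_ACCESS_ERROR = auto()
    WAIT_RVALID = auto()
    LATENCY = auto()


def next_state(state, shared_tlb_miss=False, data_gnt=False,
               data_rvalid=False, lookup_result=State.LATENCY):
    """Next PTW state for one cycle (flush handling not modeled)."""
    if state is State.IDLE:
        # A shared TLB miss starts the walk.
        return State.WAIT_GRANT if shared_tlb_miss else State.IDLE
    if state is State.WAIT_GRANT:
        # Stay until the D cache grants the data request.
        return State.PTE_LOOKUP if data_gnt else State.WAIT_GRANT
    if state is State.PTE_LOOKUP:
        # The PTE is evaluated once the read data is valid.
        return lookup_result if data_rvalid else State.PTE_LOOKUP
    if state is State.WAIT_RVALID:
        return State.IDLE if data_rvalid else State.WAIT_RVALID
    if state is State.LATENCY:
        return State.IDLE
    # PROPAGATE_ERROR and PROPAGATE_ACCESS_ERROR both lead to LATENCY.
    return State.LATENCY


# A walk that ends in a page fault after the first PTE is read.
s = State.IDLE
s = next_state(s, shared_tlb_miss=True)           # -> WAIT_GRANT
s = next_state(s, data_gnt=True)                  # -> PTE_LOOKUP
s = next_state(s, data_rvalid=True,
               lookup_result=State.PROPAGATE_ERROR)
s = next_state(s)                                 # -> LATENCY
s = next_state(s)                                 # -> IDLE
print(s.name)  # IDLE
```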

Flush Scenario

The first step when a flush is triggered is to check whether a Page Table Entry (PTE) lookup is currently in progress. If the PTW (Page Table Walker) module is in the middle of a PTE lookup, it then checks whether the data for that lookup is already valid.

Check for Data Validity (rvalid): Within the PTE lookup operation, the data used for the translation must be valid. The PTW therefore checks whether the “rvalid” (read valid) signal is inactive. If it is, the requested data has not yet arrived, and the PTW waits for the data to become valid before proceeding further.

Check for Waiting on Grant: The second condition checked during a flush is whether the PTW module is currently waiting for a “grant”, that is, for the data grant signal that acknowledges its data request.

Waiting for Grant: If the PTW module is waiting for the grant signal, it continues to wait until the grant signal is asserted before proceeding further.

Return to Idle State if Neither Condition is Met: If neither of the two conditions above applies, the PTW module can return to its idle state and continue normal operation without any dependency on the flush condition.